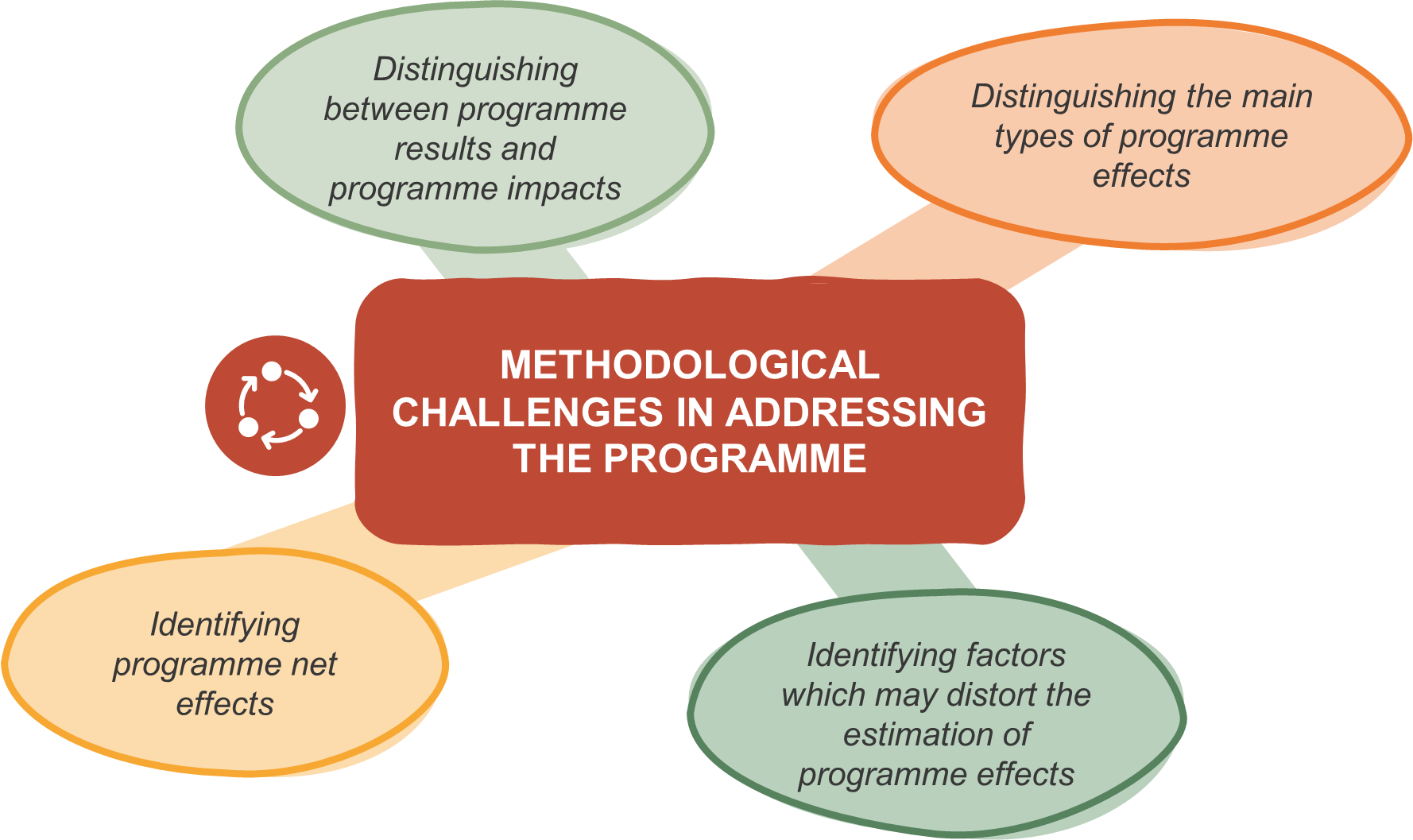

Once one understands what evaluation is, its role in the policy cycle, and who is involved, the big task remains: actually carrying it out!

Evaluators face the daunting challenge of gathering all the available evidence and data, analysing it, interpreting it and finally reporting their results. But where to start?

What is a methodology anyway?

Before doing anything, we must first define what an evaluation methodology is. In short, the evaluation methodology is a tool to help better understand the steps needed to conduct a robust evaluation. An evaluation methodology covers the conceptualisation of the evaluation and the approach which will be used to try to understand the extent of the change and the reasons why it happened.

Demystifying the mysterious animal known as ‘counterfactual’

This last point, understanding what the policy has achieved and why, covers two of the main objectives of this whole task. The following question should always be the point of departure for any evaluation:

‘What would have happened if the policy intervention had not been introduced?’

Answering this question is only possible through a counterfactual-based assessment. So, what is this mysterious ‘counterfactual’? Sounds complex, right?

Don’t worry, understanding the concept is not that difficult. A counterfactual assessment simply compares what the situation would have been if the intervention had not been introduced with the current situation with the intervention. The absence of a policy intervention can also be referred to as the ‘policy-off’ situation. By comparing this ‘policy-off’ scenario with the real situation, it is possible to determine the net effects of the public intervention.
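In symbols, the comparison can be sketched as follows. The notation is an assumption made here for illustration (a single outcome indicator $Y$, measured once with the intervention and once in the constructed ‘policy-off’ scenario); it is not taken from a specific guideline.

```latex
\text{Net effect} = Y_{\text{with policy}} - Y_{\text{policy-off}}
```

The difficulty is that $Y_{\text{policy-off}}$ is never observed directly, which is why it has to be constructed.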

A counterfactual assessment can be applied to determine and identify the direct and indirect effects of the programme or policy:

- Direct effects are those which affect programme beneficiaries in an immediate way as a direct consequence of the programme’s support.

- Indirect effects refer to the programme’s effects spread throughout the economy, society, or environment, beyond the direct effects on beneficiaries of the public intervention.

Various tools can be used for the construction of a counterfactual situation: shift-share analysis, comparison groups, simulations using econometric models, etc.

In the beginning, or ‘baseline’, the situation with the policy intervention and the counterfactual situation are identical. If the intervention has an effect, they increasingly diverge.
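To make the idea of divergence from the baseline concrete, here is a minimal Python sketch. All numbers and names (`with_policy`, `policy_off`, `net_effects`) are hypothetical and chosen only for illustration; in a real evaluation the ‘policy-off’ series would come from one of the tools listed above.

```python
# Hypothetical yearly values of an outcome indicator (illustrative numbers only).
years = [2020, 2021, 2022, 2023]
with_policy = [100.0, 104.0, 109.0, 115.0]  # observed situation with the intervention
policy_off = [100.0, 102.0, 104.0, 106.0]   # constructed counterfactual situation


def net_effects(observed, counterfactual):
    """Return the net effect per period: observed minus counterfactual."""
    if len(observed) != len(counterfactual):
        raise ValueError("both series must cover the same periods")
    return [o - c for o, c in zip(observed, counterfactual)]


effects = net_effects(with_policy, policy_off)

# At the baseline (first year) both situations are identical, so the effect is zero;
# afterwards the gap between the two series widens.
for year, effect in zip(years, effects):
    print(year, effect)
```

In this made-up example the net effect is zero at the baseline and grows in each later year, which is the pattern the paragraph above describes.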

Tools to teleport you to an alternate reality

So, what are these possible tools that can help evaluators assess and understand the policy and how do they differ?

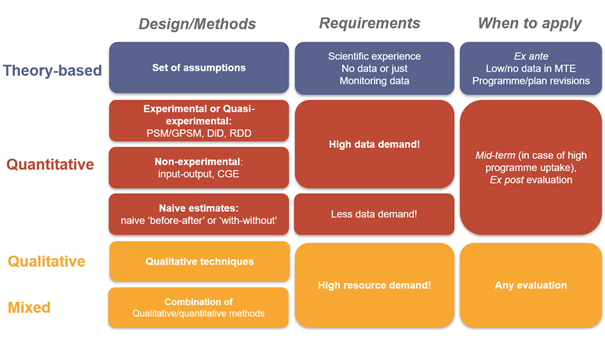

Evaluators must choose the right tool or technique based on the information and data available as well as the objectives and needs of the assessment. These tools are called evaluation methods and can be broken into different families.

Evaluation methods usually consist of procedures and protocols that ensure systemisation and consistency in the way evaluations are undertaken. Methods may focus on the collection or analysis of information and data; may be quantitative or qualitative; and may attempt to describe, explain, predict or inform actions. The choice of methods follows from the evaluation questions being asked and the mode of enquiry - causal, exploratory, normative, etc. Understanding a broad range of methods ensures that evaluators can select a suitable method depending on their needs and purpose.

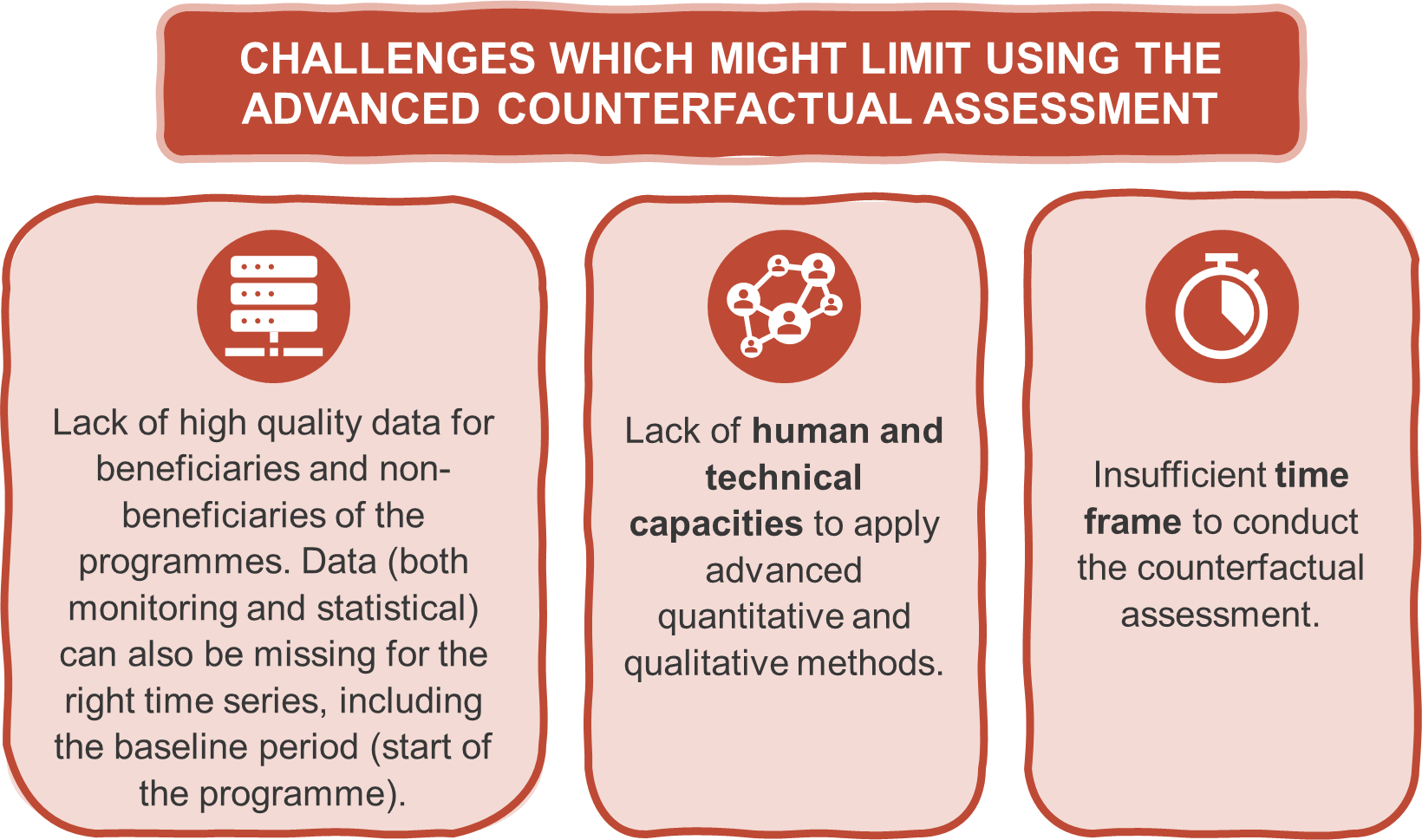

A variety of evaluation methods can be used in a counterfactual-based assessment, but the robustness of findings can vary depending on which is chosen.

Getting to know the different families of evaluation methods

The two biggest families of evaluation methods are quantitative and qualitative methods.

The numbers family

There are several ways of designing an evaluation through quantitative methods, with each differing primarily in how the ‘policy-off’ situation is constructed.

- Experimental evaluation design is considered the ‘gold standard’ in evaluations. In a randomised controlled experiment, or so-called ‘experimental design’, a randomly selected group receives support (beneficiaries) and a randomly selected control group does not (non-beneficiaries). Experimental or randomised designs are generally considered the most robust of the evaluation methodologies. However, conducting field experiments poses several methodological challenges.

- Quasi-experimental evaluation design is very similar to an experimental design; however, it lacks one key element: random assignment of groups. A crucial issue in an evaluation based on quasi-experimental design is to identify a group of programme beneficiaries and a group of programme non-beneficiaries that would be statistically identical in the absence of the programme support. If the two groups are identical (they have the same characteristics: size, geography, etc.), except that one group participates in the programme and the other does not, then any difference in outcomes can be attributed to the programme.

- Non-experimental evaluation design can be used when it is not possible to identify a suitable control group through the application of a quasi-experimental method. Under this design, programme beneficiaries are compared with programme non-beneficiaries using statistical or qualitative methods to account for differences between the two groups.
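The idea of building a comparison group of similar non-beneficiaries can be sketched in a few lines of Python. This is a deliberately simplified illustration, assuming a single matching characteristic (a hypothetical `size_ha` field) and made-up incomes; real applications usually match on many characteristics at once, for example through an estimated propensity score.

```python
def nearest_neighbour_match(beneficiaries, non_beneficiaries, covariate):
    """Pair each beneficiary with the non-beneficiary closest on `covariate`.

    Matching is done with replacement: one non-beneficiary may serve as the
    comparison unit for several beneficiaries.
    """
    pairs = []
    for b in beneficiaries:
        match = min(non_beneficiaries, key=lambda n: abs(n[covariate] - b[covariate]))
        pairs.append((b, match))
    return pairs


def average_difference(pairs, outcome):
    """Average outcome gap between beneficiaries and their matched comparisons."""
    return sum(b[outcome] - m[outcome] for b, m in pairs) / len(pairs)


# Hypothetical units (illustrative numbers only).
beneficiaries = [
    {"size_ha": 20, "income": 31.0},
    {"size_ha": 50, "income": 58.0},
]
non_beneficiaries = [
    {"size_ha": 22, "income": 28.0},
    {"size_ha": 48, "income": 54.0},
    {"size_ha": 200, "income": 150.0},  # very different unit, never selected as a match
]

pairs = nearest_neighbour_match(beneficiaries, non_beneficiaries, "size_ha")
effect = average_difference(pairs, "income")
print(effect)
```

The very large non-beneficiary is left out of the comparison because it does not resemble any beneficiary; including it would distort the estimate.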

The statistical methods which can be used in quantitative evaluations are diverse and can include the following: propensity score matching (PSM), generalised propensity score matching (GPSM), difference-in-differences (DiD), regression discontinuity design (RDD) and computable general equilibrium (CGE) models.

- Naïve estimates: In this approach, the comparison groups are usually selected arbitrarily, leading to quantitative results that are statistically biased. Evaluators sometimes fall back on this less robust approach when they lack sufficient data and control groups, but it does not provide rigorous knowledge about a specific programme’s direct and indirect effects. A naïve estimate implies applying methods based on insufficient evidence, ad hoc surveys of a group of beneficiaries, opinions of administrative officials, etc. These techniques are in general unsuitable for addressing the issues considered crucial in any quantitative evaluation framework.
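The gap between a naïve estimate and a more careful one can be illustrated with a small Python sketch. The figures and function names below are hypothetical; the example contrasts a simple after-only comparison with a difference-in-differences (DiD) calculation, which relies on the assumption that both groups would have followed the same trend without the programme.

```python
from statistics import mean

# Hypothetical outcomes before and after the programme (illustrative numbers only).
beneficiaries_before = [50.0, 54.0, 58.0]
beneficiaries_after = [60.0, 65.0, 67.0]
non_beneficiaries_before = [40.0, 42.0, 44.0]
non_beneficiaries_after = [44.0, 46.0, 48.0]


def naive_estimate(treated_after, control_after):
    """Compare the groups after the programme only, ignoring where they started."""
    return mean(treated_after) - mean(control_after)


def did_estimate(treated_before, treated_after, control_before, control_after):
    """Difference-in-differences: change among beneficiaries minus change among non-beneficiaries."""
    treated_change = mean(treated_after) - mean(treated_before)
    control_change = mean(control_after) - mean(control_before)
    return treated_change - control_change


naive = naive_estimate(beneficiaries_after, non_beneficiaries_after)
did = did_estimate(
    beneficiaries_before,
    beneficiaries_after,
    non_beneficiaries_before,
    non_beneficiaries_after,
)
print(naive, did)
```

In this made-up data the beneficiaries were already better off before the programme, so the naïve comparison credits that pre-existing gap to the programme, while the DiD calculation removes it.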

The person-based family

Qualitative evaluation methods tend to focus less on the numbers and more on the opinions and views of individuals. Like quantitative methods, qualitative methods are diverse in nature.

- Focus groups are a qualitative and participatory evaluation technique which allows a carefully selected group of stakeholders to discuss the results and impacts of the policy interventions. Focus groups should be facilitated by an external moderator and include a variety of people coming from different sub-groups of stakeholders (e.g. Managing Authority, implementing body, beneficiaries, independent experts). A focus group can be repeated separately for beneficiaries and non-beneficiaries and results can be compared. This evaluation method can be time-consuming and requires a moderator with excellent facilitation skills. Compared with other qualitative methods, it has the advantage of allowing for in-depth discussion, while its main disadvantage is the frequent difficulty of attracting a diverse range of stakeholders.

- Interviews are structured conversations, based on questions and answers, between the evaluator and a selected evaluation stakeholder. Interviews can also be conducted so that they respect the counterfactual principle (i.e. having interviews with beneficiaries and non-beneficiaries and including questions on a hypothetical policy-off situation). As with focus groups, an advantage of this approach is the possibility of collecting in-depth information. However, this information can often be biased, with a high degree of subjectivity. For interviews to be robust, the sample of interviewees must be representative and the questions asked must be based on careful desk research.

- Theory-based approaches: the ‘theory of change’, which is frequently applied in theory-based evaluations, can be defined as a detailed description of a set of assumptions that explains both the steps leading to the long-term goal and the connections between the policy or programme’s activities and the outcomes that occur at each step. This evaluation approach rests on the premise that programmes are based on an explicit or implicit theory about how and why the programme will work. In order to use a ‘theory of change’, the theoretical causal links between the intervention and its specific effects, described in steps, should be logical and empirically testable. So how can this be achieved? Evaluators must first develop hypotheses that can be tested through critical comparisons. This relies on the stakeholders’ experience of how these types of programmes seem to work, while also taking into consideration prior evaluation research findings. In practice, theory-based evaluations seek to test programme theory by investigating if, how or why policies or programmes cause intended or observed outcomes. Testing the theories can be done on the basis of existing or new data, both quantitative and qualitative. Several frequently used data gathering techniques can be applied (e.g. key informant interviews, focus groups and workshops, or case studies).


When families converge and cross over

Quantitative and qualitative techniques sometimes converge in what is known as mixed evaluation approaches.

- Surveys are qualitative or mixed (qualitative and quantitative) methods that apply a deductive analytical approach. What does this mean? It means that the information gathered from a representative sample is considered to depict the reality of the total population, because the survey and its hypotheses are designed on the basis of the literature (i.e. consolidated previous knowledge). Surveys can be conducted with beneficiaries and non-beneficiaries and hence can illustrate a counterfactual situation. Surveys can also potentially cover a large and diverse range of stakeholders. That said, surveys are often highly resource-demanding.

- Case studies can provide a detailed picture of a specific example through an intensive analysis of documents, statistical data, field observations and interviews. Case studies allow a detailed examination of the actual elements in line with the evaluation goals. When basic data are scarce, the picture provided by a case study is often more reliable than the outputs from other tools. Case studies provide an in-depth view of a specific situation or area. Unfortunately, this means that their scope is limited and their findings cannot be extrapolated to the whole population.
